Course project in 151-0634-00L Perception and Learning for Robotics

Members: Zhizheng Liu and me

Lecturers: Dr. C. D. Cadena Lerma and Dr. Jen Jen Chung, Autonomous Systems Laboratory, ETH Zurich


Abstract

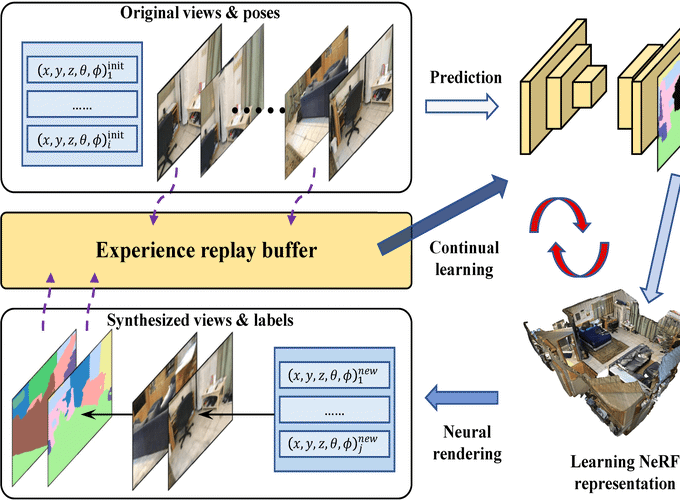

Semantic segmentation is widely applied in autonomous driving and other mobile applications, where trained networks are usually not updated during deployment. In contrast to naive adaptation methods such as fine-tuning, continual learning can improve a model's adaptation to new environments while retaining its generalization ability. In particular, experience replay strikes a reasonable balance between learning from new samples and retaining information from previously observed scenes, by storing or generating data from those scenes. To generate such samples, we apply recent advances in neural rendering to produce high-quality new views. In an extensive experimental evaluation on the ScanNet dataset, our proposed pipeline, which incorporates semantic information, generates images with guaranteed view consistency, together with pseudo-labels, within 10 minutes. Further, the continual learning pipeline successfully adapts to real-world indoor scenes. Our method improves segmentation performance by about 6.0% on average compared to the fixed pre-trained network, while effectively retaining generalization capability on the previous training data.
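To make the experience-replay idea concrete, here is a minimal sketch of how a training batch can mix new-scene samples with replayed samples from earlier scenes. It is not the project's implementation. The abstract does not say how the replay memory is managed, so the fixed-capacity buffer filled by reservoir sampling, the `replay_ratio` parameter, and the names `ReplayBuffer` and `mixed_batch` are all hypothetical. In the pipeline described above, the replayed samples could instead be neural-rendered images paired with pseudo-labels rather than stored frames.

```python
import random
from typing import Any, List, Tuple

# Hypothetical sample type: an (image, label) pair. The concrete types are placeholders.
Sample = Tuple[Any, Any]


class ReplayBuffer:
    """Fixed-capacity memory of past samples, filled by reservoir sampling (an assumption)."""

    def __init__(self, capacity: int, seed: int = 0) -> None:
        self.capacity = capacity
        self.items: List[Sample] = []
        self.seen = 0  # total number of samples offered to the buffer so far
        self.rng = random.Random(seed)

    def add(self, sample: Sample) -> None:
        self.seen += 1
        if len(self.items) < self.capacity:
            self.items.append(sample)
        else:
            # Reservoir sampling: every sample offered so far ends up stored
            # with equal probability capacity / seen.
            j = self.rng.randrange(self.seen)
            if j < self.capacity:
                self.items[j] = sample

    def sample(self, k: int) -> List[Sample]:
        k = min(k, len(self.items))
        return self.rng.sample(self.items, k)


def mixed_batch(new_samples: List[Sample], buffer: ReplayBuffer,
                batch_size: int, replay_ratio: float = 0.5) -> List[Sample]:
    """Build one batch: a `replay_ratio` share from old scenes, the rest from the new scene."""
    n_replay = min(int(batch_size * replay_ratio), len(buffer.items))
    n_new = min(batch_size - n_replay, len(new_samples))
    batch = buffer.rng.sample(new_samples, n_new) + buffer.sample(n_replay)
    buffer.rng.shuffle(batch)  # avoid ordering the batch by source
    return batch


# Usage: 1000 samples from previously seen scenes, 50 from the new scene.
buf = ReplayBuffer(capacity=100)
for i in range(1000):
    buf.add((f"old_img_{i}", f"old_lbl_{i}"))
new = [(f"new_img_{i}", f"new_lbl_{i}") for i in range(50)]
batch = mixed_batch(new, buf, batch_size=8, replay_ratio=0.5)
```

The replay ratio is the knob that trades adaptation to the new scene against retention of earlier scenes. Reservoir sampling keeps the memory an unbiased sample of everything seen so far without storing it all.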

Video

Code

🚧 To be updated in ethz-asl/nr_semantic_segmentation!